Microsoft has released VibeVoice, an open-source text-to-speech framework that can turn plain text into up to 90 minutes of expressive, multi-speaker audio in a single generation run. That makes it possible to produce long conversations, podcasts, or roundtable chats end to end, without stitching clips together. It’s a research-grade release with public checkpoints, practical demos, and a design focused on how well the dialogue flows, how natural the pacing sounds, and how consistent the speakers stay over time.

What VibeVoice is

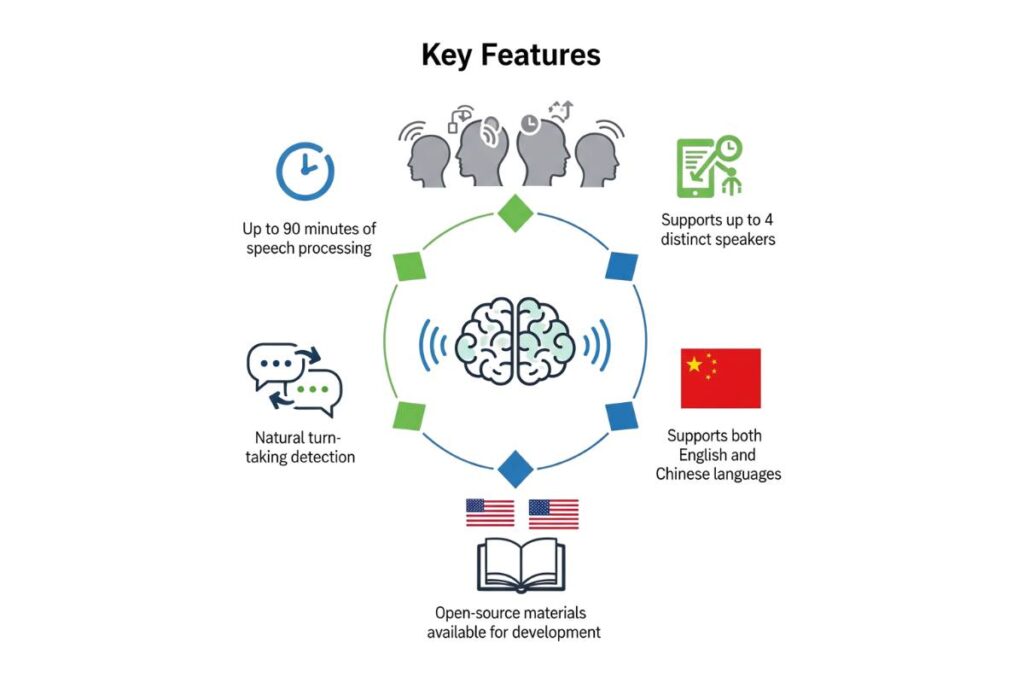

VibeVoice is built for long‑form, multi‑voice storytelling: it turns scripts into coherent, lifelike exchanges where voices take turns, keep their tone, and hold the thread without drifting. In its current form, it supports up to four distinct speakers in one generation run and targets 90‑minute segments for English and Chinese, the two languages covered by its training data. Short, single‑voice clips aren’t the aim here; the system is tuned for sustained dialogue that feels like people actually talking – pausing, handing off, and staying in character.

Why it matters

Long-form speech synthesis is hard, whether the target is 10, 30, or 90 minutes. Models tend to repeat themselves, lose track of who is speaking, or flatten into a monotone. VibeVoice addresses those problems by keeping context for longer, handling turn-taking smoothly, and preserving expressive detail that doesn’t fade after a few minutes. That matters for podcast prototypes, mock panel interviews, training materials, and accessibility use cases, where realistic multi-speaker output without manual stitching can save hours and open up new creative workflows.

Key capabilities

- Up to 90 minutes of speech per run, with as many as four distinct speakers in a single track.

- Natural turn‑taking rather than overlapping speech, so everything stays clear and easy to edit.

- English and Chinese support, including demos that showcase emotion, cross‑lingual narration, and controlled singing in limited scenarios.

- Open‑source checkpoints and materials that enable local runs or hosted trials, depending on hardware and patience for queues.
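Before committing to a long run, it can help to confirm that a script stays within the four-speaker limit listed above. The following is a minimal sketch, not part of the VibeVoice release: the `Speaker: text` line format and the helper names `parse_script` and `check_speaker_limit` are assumptions made for illustration, so check the project's own documentation for the input format it actually expects.

```python
import re

MAX_SPEAKERS = 4  # per-run speaker limit stated in this article

# Hypothetical line format "Speaker 1: text"; the real input format may differ.
TURN_RE = re.compile(r"^(?P<speaker>[^:]+):\s*(?P<text>.+)$")


def parse_script(script: str) -> list[tuple[str, str]]:
    """Return (speaker, text) turns, one per non-empty line."""
    turns = []
    for line_no, raw in enumerate(script.splitlines(), start=1):
        line = raw.strip()
        if not line:
            continue  # blank lines separate turns and carry no content
        match = TURN_RE.match(line)
        if match is None:
            raise ValueError(f"line {line_no}: expected 'Speaker: text'")
        turns.append((match["speaker"].strip(), match["text"].strip()))
    return turns


def check_speaker_limit(turns, limit: int = MAX_SPEAKERS) -> list[str]:
    """Return the sorted distinct speakers, or raise if there are too many."""
    speakers = sorted({speaker for speaker, _ in turns})
    if len(speakers) > limit:
        raise ValueError(f"{len(speakers)} speakers found, limit is {limit}")
    return speakers
```

Because each line is one turn, the parsed structure is inherently non-overlapping, which mirrors the turn-taking behavior described above.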

Under the hood

Here’s the interesting bit: VibeVoice pairs a lightweight Large Language Model (LLM) with a diffusion‑based acoustic head. The LLM organizes content, timing, and speaker turns; the diffusion module paints the sound details – prosody, intonation, breath. Two continuous speech tokenizers – Acoustic and Semantic – operate at a low frame rate (7.5 Hz), compressing audio into compact features that the model can handle over long contexts without losing richness.
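To see why the low frame rate matters, a rough back-of-the-envelope calculation helps. The sketch below counts how many 7.5 Hz frames a run of a given length produces and compares that with the context lengths quoted in this article. Several things here are assumptions: that each frame costs roughly one position in the context, that "32K" means 32,768, and that the audio is sampled at 24 kHz (used only to illustrate the compression ratio).

```python
FRAME_RATE_HZ = 7.5  # tokenizer frame rate quoted in the article


def frames_for(minutes: float, frame_rate_hz: float = FRAME_RATE_HZ) -> int:
    """Number of tokenizer frames needed to cover `minutes` of audio."""
    return round(minutes * 60 * frame_rate_hz)


def samples_per_frame(sample_rate_hz: int,
                      frame_rate_hz: float = FRAME_RATE_HZ) -> float:
    """How many raw audio samples one frame summarizes."""
    return sample_rate_hz / frame_rate_hz


if __name__ == "__main__":
    # (duration in minutes, context length in tokens) as quoted in the article;
    # 32_768 is an assumed reading of "32K".
    for minutes, context in ((90, 65_536), (45, 32_768)):
        frames = frames_for(minutes)
        print(f"{minutes} min -> {frames} frames, "
              f"{context - frames} positions left for text and overhead")
    # Assumed 24 kHz sample rate, for illustration only.
    print(f"samples per frame at 24 kHz: {samples_per_frame(24_000):.0f}")
```

Under these assumptions, 90 minutes of audio comes to 40,500 frames, which fits inside a 65,536-token window with room left over for the script itself.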

The backbone in the available release is Qwen2.5‑1.5B, guided via a long‑context curriculum (up to 65,536 tokens) to keep dialogue coherent across extended spans. A ~123M‑parameter diffusion head then synthesizes acoustic VAE features conditioned on the LLM’s plan, using techniques like classifier‑free guidance and DPM‑Solver to deliver crisp, intelligible speech. Put simply: the LLM “decides what to say and when,” the diffusion head “decides how it should sound.”
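Classifier-free guidance, mentioned above, is a general diffusion technique rather than something specific to VibeVoice. In its usual form, the model makes one prediction with conditioning and one without, then extrapolates from the unconditional result toward the conditional one. The sketch below shows only that textbook combination step on plain Python lists; the function name `cfg_combine` and the scale values are illustrative, and nothing here is taken from VibeVoice's actual code.

```python
def cfg_combine(cond: list[float], uncond: list[float],
                guidance_scale: float) -> list[float]:
    """Element-wise classifier-free guidance: uncond + scale * (cond - uncond).

    scale = 0 returns the unconditional prediction, scale = 1 returns the
    conditional one, and scale > 1 pushes further toward the condition.
    """
    if len(cond) != len(uncond):
        raise ValueError("predictions must have the same length")
    return [u + guidance_scale * (c - u) for c, u in zip(cond, uncond)]
```

In a real sampler this step would run once per denoising iteration, with a solver such as DPM-Solver deciding how the combined prediction updates the sample.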

A quick mental model

“Imagine you’ve got a script for a two‑host show,” a producer might say. “You don’t want it to sound like a robot reading a transcript. You want it to breathe: ‘Uh‑huh… right, and then – ’ and keep the energy.” That’s where the LLM’s long‑context planning meets the diffusion head’s expressive detail. It’s not just the words; it’s the vibe, the rhythm, the feel.

Model lineup

Microsoft outlines three configurations designed for different needs. One is geared toward low‑latency streaming (0.5B, coming soon), one is the current workhorse (1.5B, available now), and one is a higher‑capacity preview for quality‑seeking experiments (7B preview).

Model comparison

| Variant | Context window | Generation length | Notes |
| --- | --- | --- | --- |
| 0.5B (streaming) | Not listed | Not listed | Targeted at real‑time use; planned for low‑latency scenarios. |
| 1.5B (current) | 64K | ~90 minutes | Available today; optimized for long‑form, multi‑speaker dialogue. |
| 7B (preview) | 32K | ~45 minutes | Higher capacity, preview state; aimed at quality with mid‑length runs. |

Hardware needs and performance

Guidance from community testing and coverage suggests the 1.5B model is feasible on prosumer hardware, often around 7–8 GB of VRAM for multi‑speaker dialogue with conservative settings. The 7B preview may need closer to 18 GB of VRAM for comfortable inference at speed, so it’s more of a “quality experiments on beefier GPUs” option. The upshot: the 1.5B checkpoint is approachable for many creators with common 8 GB cards, especially when batch size and decoding parameters are tuned.
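The trade-off between memory and duration can be written down as a small lookup. This is a sketch only: the VRAM figures are the approximate community-reported numbers from the paragraph above (rounded to 8 GB and 18 GB), the duration limits come from the model comparison, and the `Variant` class and `pick_variant` helper are hypothetical names, not part of any VibeVoice tooling.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Variant:
    name: str
    approx_vram_gb: float  # approximate, community-reported figure
    max_minutes: int       # approximate generation length per run


VARIANTS = (
    Variant("1.5B", 8.0, 90),
    Variant("7B preview", 18.0, 45),
)


def pick_variant(vram_gb: float, minutes: float) -> Optional[Variant]:
    """Return the largest variant that fits both budgets, or None."""
    fitting = [
        v for v in VARIANTS
        if v.approx_vram_gb <= vram_gb and v.max_minutes >= minutes
    ]
    # Prefer the higher-capacity model when more than one variant fits.
    return max(fitting, key=lambda v: v.approx_vram_gb, default=None)
```

For example, `pick_variant(24, 60)` returns the 1.5B entry even on a large GPU, because a 60-minute script exceeds the 7B preview's roughly 45-minute range.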

Demos and language support

Project demos highlight spontaneous emotion, cross‑lingual transfers between Mandarin and English, extended conversational segments, and controlled singing. Still, the scope is explicit: training data covers English and Chinese; other languages are out of range for now and may produce unpredictable or even offensive results. Also, the models are speech‑only – no background music, Foley, or ambience – so any production mix will need external sound design.

Safety, disclaimers, and provenance

Use policies prohibit voice impersonation without consent, disinformation, authentication bypass, and other misuse. To reinforce transparency, every output includes an audible disclaimer (“This segment was generated by AI”), plus an imperceptible watermark for later verification. The team also indicates it logs hashed inference requests for abuse monitoring, with plans for aggregated reporting. Licensing is permissive for research and development, but the guidance is conservative: test thoroughly before commercial deployment.

What builders can make

Think rapid‑prototype podcasts, panel simulations, interview mockups, and language‑learning dialogues – projects where natural turn‑taking and consistent voices matter. Because output is speech‑only and non‑overlapping, editors get clean tracks that slot neatly into DAWs, with music and ambience layered in post. That separation helps maintain clarity, provenance, and control over the final sound.

How to try it

The release includes checkpoints and setup instructions for local runs and mentions hosted experiences that may involve queues. A pragmatic path: start with the 1.5B model on an 8 GB GPU to validate the workflow, then step up to the 7B preview if memory budgets and quality targets line up. Use the provided demos to calibrate expectations around expressiveness, cross‑lingual behavior, and long‑form stability.

Limits to keep in mind

- Language scope: English and Chinese only at this stage; other languages are out of scope and may behave unpredictably.

- Turn‑taking only: no overlapping speech, by design, to keep clarity and editing simple.

- Speech‑only: no music, ambience, or Foley – add those layers downstream.

- Not a real‑time voice converter: a streaming‑oriented 0.5B is planned for low‑latency needs.

Early reception and community vibe

Coverage has leaned into the wow factor – 90‑minute multi‑speaker generation, accessible checkpoints, try‑it‑now demos – balanced with grounded notes about VRAM needs, early singing artifacts, and the usual “this is still research” common sense. The tone, broadly speaking: cautiously enthusiastic. It’s impressive and usable, but also evolving, which is exactly how a research‑first release should grow.

The research context

Two technical choices stand out. The first is the use of continuous Acoustic and Semantic tokenizers at 7.5 Hz, which compress audio aggressively while preserving detail and let the LLM operate efficiently across long contexts. The second is the long‑context training curriculum (up to 65,536 tokens), which aims to keep output coherent at length, the zone where many TTS systems historically repeat or drift. The diffusion head then re‑introduces nuance – breath, intonation, pacing – without losing the semantic plan.

Roadmap signals

The lineup hints at near‑term goals: a lighter streaming model for live or low‑latency scenarios, the current 1.5B “sweet spot” for long‑form generation, and a 7B preview aimed at higher fidelity over roughly half the duration. If the streaming path lands well, it could power live assistants and interactive experiences that benefit from natural turn‑taking without heavy lag.

Practical evaluation tips

- Scale gradually: begin with 10–20 minute scripts, check for stability, repetition, and speaker consistency, then push toward 90 minutes.

- Test both languages if relevant: cross‑lingual performance depends on prompts and content type; lean on demos to set expectations.

- Budget VRAM headroom: the 1.5B model favors length and efficiency, while the 7B preview trades some duration for capacity and potential quality.
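The "scale gradually" tip can be made concrete by estimating how long a script will run and trimming it to a target length before each test. The sketch below is a rough heuristic: the 150 words-per-minute speaking rate is an assumption, the whitespace word count only makes sense for English text (not Chinese), and `estimate_minutes` and `truncate_to_minutes` are hypothetical helper names.

```python
WORDS_PER_MINUTE = 150  # assumed average speaking rate; tune per voice


def estimate_minutes(text: str, wpm: float = WORDS_PER_MINUTE) -> float:
    """Rough spoken duration of `text`, based on a whitespace word count."""
    return len(text.split()) / wpm


def truncate_to_minutes(turns: list[str], target_minutes: float,
                        wpm: float = WORDS_PER_MINUTE) -> list[str]:
    """Keep whole turns from the start until the estimated budget is used.

    The first turn is always kept, so the result is never empty for a
    non-empty script.
    """
    budget_words = target_minutes * wpm
    kept: list[str] = []
    words = 0
    for turn in turns:
        n = len(turn.split())
        if kept and words + n > budget_words:
            break
        kept.append(turn)
        words += n
    return kept
```

A test ladder might then generate the same script truncated to 10, 20, 45, and 90 minutes, listening for repetition and speaker drift at each step before moving to the next.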

A quick aside from the studio

“Okay, so you have four speakers,” a sound editor might say. “You don’t want chaos; you want clean lanes where no one steps on anyone else’s lines.” That’s why non‑overlapping speech is a feature, not a limitation. It keeps the mix clear and lets you add intentional overlaps later if you want them.

Bottom line

VibeVoice brings something rare to open‑source TTS: credible long‑form, multi‑speaker speech that holds together for the length of an episode, not just a clip. The language scope is limited at present, and overlapping dialogue or real-time translation is not the current objective. Still, the architectural decisions (long context, diffusion synthesis, continuous tokenization) point to a deliberate, research-oriented trajectory. The release invites builders and researchers to work out, in public, what makes a synthetic conversation feel conversational.

Specs snapshot

- Backbone: Qwen2.5‑1.5B with a diffusion‑based acoustic head; continuous Acoustic and Semantic tokenizers at 7.5 Hz.

- Context length: up to 65,536 tokens in the 1.5B release; 7B preview at 32K.

- Output duration: roughly 90 minutes (1.5B) and 45 minutes (7B preview) per run, with up to four speakers.

- Languages: English and Chinese training; demos include emotion, cross‑lingual narration, and controlled singing.

- Safety: audible AI disclaimer in each output; imperceptible watermark for provenance; hashed logs to monitor abuse patterns.

- Availability: open checkpoints for research and development; local setup recommended; hosted trials may queue; streaming‑oriented 0.5B planned.